Microsoft announced on Tuesday the release of Phi-3-mini, a new, more affordable artificial intelligence (AI) model. The lightweight model is designed to offer capabilities similar to those of its predecessors at a fraction of the cost. Sébastien Bubeck, Microsoft’s Vice President of GenAI Research, emphasised the economic advantages of the new model. “Phi-3 is not just slightly cheaper; it’s dramatically cheaper. We’re looking at a cost reduction of about tenfold compared to other models in the market with comparable capabilities,” Bubeck said at the launch, Reuters reported.

What Can Phi-3-mini Do?

Phi-3-mini is the first in a planned series of small language models (SLMs) from Microsoft. SLMs are designed for simpler tasks, which makes them well suited to businesses with limited resources.

The approach is part of the tech giant’s strategic bet on AI, a technology it believes will significantly reshape industries and workplaces worldwide.

Microsoft said that Phi-3-mini, which has 3.8 billion parameters, can outperform models twice its size.

How Is an SLM Different From an LLM?

A key feature of Phi-3-mini, as an SLM, is its compact size, which allows it to run efficiently on local machines without extensive processing power.

Unlike large language models (LLMs), which require multiple parallel processing units, Phi-3-mini can generate output quickly on minimal hardware, making it accessible to smaller companies and individual users.

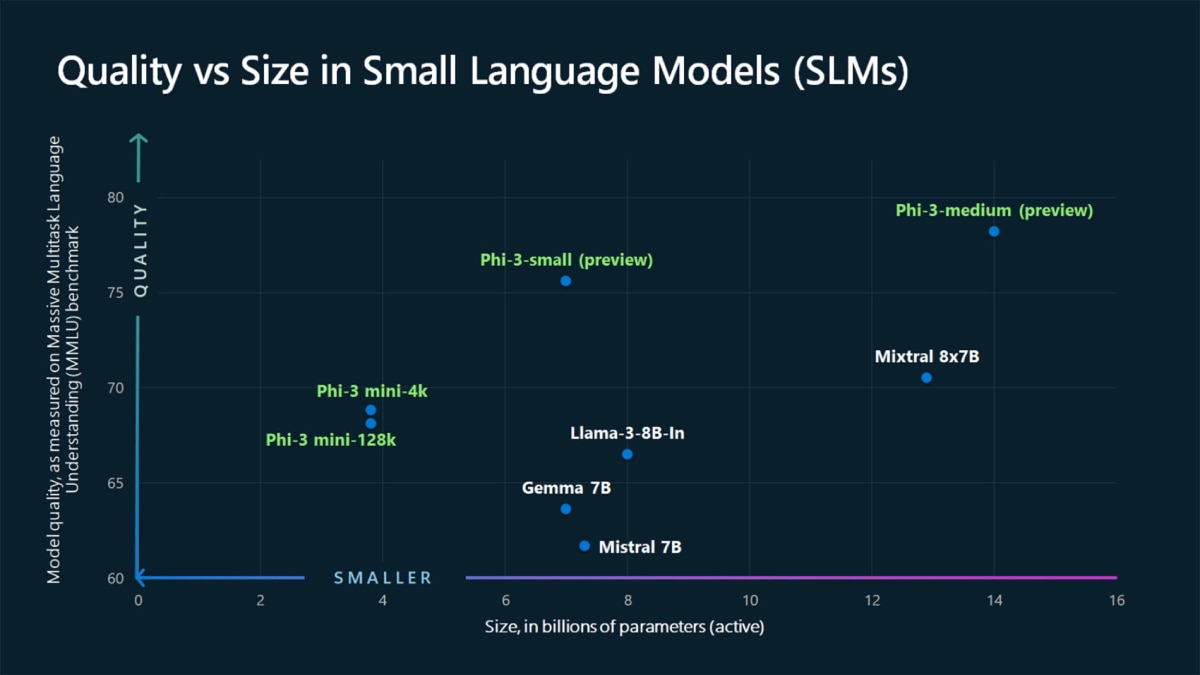

Graphic illustrating how the quality of the new Phi-3 models, as measured by performance on the Massive Multitask Language Understanding (MMLU) benchmark, compares with that of other models of similar size. (Courtesy: Microsoft)

Explaining the efficiency of SLMs, Jaspreet Bindra, founder of TechWhisperer UK Limited, told ABP Live, “It is a part of Microsoft’s broader initiative to introduce a series of SLMs that are tailored for simpler tasks, making them ideal for businesses with fewer resources.”

“This approach promises to reduce costs, make the models faster as they are on the edge, and promise more enterprise and consumer use cases of Generative AI,” Bindra added.

Where Will Phi-3-mini Be Available?

Microsoft has made Phi-3-mini available immediately on several platforms, including its own Azure AI model catalogue, the popular machine learning platform Hugging Face, and Ollama, a framework for running models on local machines. The model has also been optimised for Nvidia’s GPUs and will be supported by Nvidia Inference Microservices (NIM), which Microsoft says will improve its performance and versatility.

The launch follows Microsoft’s recent $1.5 billion investment in the UAE-based AI firm G42, part of its ongoing push to expand its AI footprint globally. The company has also partnered with French startup Mistral AI to make Mistral’s models available on its Azure cloud computing platform, reinforcing Microsoft’s position as a leader in AI innovation and accessibility.